- DOI 10.18231/j.ijca.2025.001

The rapid evolution of artificial intelligence (AI) in anesthesiology brings promising opportunities along with significant ethical challenges, particularly around the transparency and accountability of AI-driven decisions. AI has emerged as a powerful tool that could change the way patient care, research, and medical education are approached in the field of anesthesia. These advances bring exciting possibilities while raising important questions about responsible implementation.[1]

AI provides numerous benefits for anesthesiologists and their patients. AI algorithms can be applied to predict postoperative complications, personalize drug dosing, and monitor vital signs more precisely. For example, machine learning models can estimate postoperative mortality and morbidity, thereby facilitating more tailored risk stratification and treatment.[2] In pain management, AI systems can analyze patient data to suggest optimal analgesia regimens and could decrease opioid use while enhancing patient outcomes.[3] AI can also be used to optimize simulation-based training for anesthesiology residents, creating a more adaptive learning experience.[4]

The integration of AI in anesthesiology raises numerous ethical dilemmas and challenges that must be carefully handled. The legal consequences of using AI in anesthesia are important and complex. One of the most important concerns is accountability and responsibility. When AI-assisted systems are involved, deciding who is responsible for the decisions made by these systems is a complex issue. If an AI system recommends an inappropriate drug dosage or fails to detect a critical change in a patient's condition, the question of liability arises.[5] Who is liable: the anesthesiologist, who possibly relied on the system's recommendations; the hospital; or the AI developers, who designed the system? Clear guidelines are needed to assign accountability properly. Left unresolved, this ambiguity of responsibility may undermine trust in AI-guided anesthesia management.

Another major issue is transparency and trust. In medical practice, transparency is necessary for maintaining trust among patients, colleagues, and regulatory bodies. If AI tools are used in clinical decision-making, all stakeholders must be informed of their use and function.[6] Otherwise, a lack of transparency may erode trust in the doctor-patient relationship and reduce patients' confidence in the medical system. Hence, AI processes must be communicated effectively in order to protect this trust.

Patient privacy and data security are another major legal concern. AI systems in anesthesia require large amounts of sensitive health data to function effectively. This raises the risk of breaches of patient confidentiality and has implications for compliance with health care privacy laws. Anesthesia departments and hospitals are required to implement strong data protection measures when using AI tools.[7]

There are also legal considerations around informed consent. Patients may need to be explicitly told when AI will be part of their anesthetic treatment, and they may also need to be offered the opportunity to withdraw from the treatment. Nevertheless, it is a challenge to describe the complex mechanisms of AI to patients in an easy-to-understand manner.[8]

Further, there is a concern regarding possible bias and discrimination in AI algorithms applied to anesthesia. An AI tool learns from data; if the data are not diverse and representative of the different patient demographics, the system is likely to give biased recommendations. This may result in unfair treatment of specific patient populations and may expose healthcare professionals to liability for discrimination. For example, an AI trained primarily on adult patient data may not provide good recommendations for pediatric patients and may jeopardize their health. Such biases can be addressed only through diverse datasets and rigorous validation; otherwise, AI could compromise patient safety.[9]

While AI can significantly add to the capabilities of an anesthesiologist, the human elements that are the foundation of anesthesiology should never be replaced. Critical thinking, ethical judgment, and empathy, among other qualities, cannot be replaced by AI. The practice of anesthesiology involves making complicated decisions in dynamic and unpredictable environments, where human expertise is invaluable. To maintain the integrity of the field, there is a need to define clear boundaries on the use of AI in clinical decision-making, double-check AI-assisted recommendations, and always disclose the use of AI tools to patients and colleagues.

As the use of AI in anesthesia practice expands, these legal and ethical considerations should be weighed carefully through policy, legislation, and regulation to safeguard patients' safety and rights without hindering the advancement of potentially valuable AI applications in anesthesia. Because the field is young, legal frameworks for dealing with AI-related errors in anesthesia are still developing. Introducing AI into anesthesiology in a responsible and safe manner requires meeting professional standards and adhering to ethical guidelines. Many organizations have issued guidelines on the use of AI in anesthesia. These guidelines highlight human oversight of AI-assisted decision-making, the ethical obligation to ensure patient privacy and data security, and rigorous validation of AI tools before clinical implementation, so that they meet high standards of safety and effectiveness. These measures will help preserve the human touch in anesthesia care, ensuring that AI remains a supportive tool rather than a replacement.[10], [11]

Current health data privacy law, such as HIPAA in the U.S., applies to patient data used to develop an AI system, but may need to be modified to fully take into account AI-specific privacy and security issues.[12] Anesthesia departments need to have strong data protection mechanisms in place when using AI applications to prevent legal problems. As AI becomes more prevalent in anesthesia practice, new legislation and case law will likely emerge to address these legal grey areas. At present, in the absence of well-established legal regimes for AI in clinical anesthesia, the liability of anesthesiologists who use AI remains an open question that calls for caution and active consideration.

Beyond these specific concerns, the general principles established by medical and research ethics authorities must also be considered and are vital for AI in healthcare. Transparency about the application of AI in clinical practice and research is a central tenet.[13] Patients and medical staff should be informed about the AI tools in use, so that the relationship between each tool and clinical decision-making is clearly communicated. A key ethical issue is how to maintain human judgment and the physician-patient relationship so that AI is used as a supplement to, and not a substitute for, medical knowledge and ethical decision-making. European Union (EU) policy documents on the regulation of AI, like the AI HLEG Ethics Guidelines, the White Paper on AI, the European Parliament (EP) Report on AI Framework, and the European Commission (EC) Proposal for the AI Act, consistently mention ideas such as transparency, explainability, and traceability.[14], [15] Transparency in AI should be regarded both as a legal principle and as a "way of thinking."

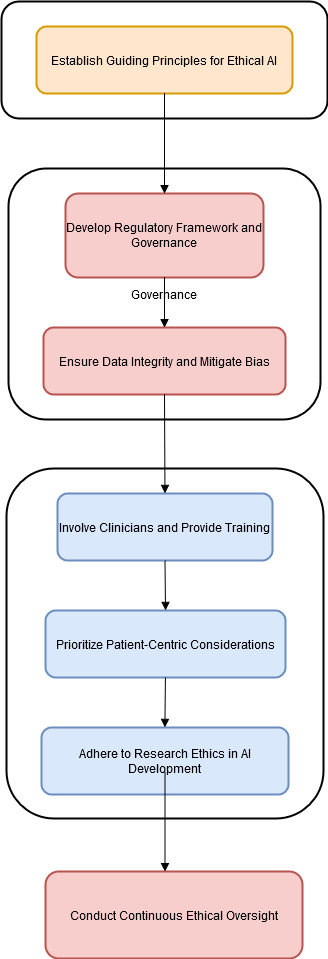

With the rising role of artificial intelligence (AI) in anesthesia, rigorous yet practical guidelines that are precise and clearly defined must be provided for its design, introduction, and application ([Figure 1]). Until such guidelines are fully developed, anesthesiologists should exercise caution and prioritize patient safety when adopting AI technologies, ensuring that any integration of AI into clinical practice is carefully considered and ethically sound.

Conflict of Interest

None.

References

- M Cascella, MC Tracey, E Petrucci, EG Bignami. Exploring artificial intelligence in anesthesia: A primer on ethics, and clinical applications. Surgeries 2023.

- A Bihorac, T Ozrazgat-Baslanti, A Ebadi, A Motaei, M Madkour, PM Pardalos. Mysurgeryrisk: development and validation of a machine-learning risk algorithm for major complications and death after surgery. Ann Surg 2019.

- R Antel, S Whitelaw, G Gore, P Ingelmo. Moving towards the use of artificial intelligence in pain management. Eur J Pain 2024.

- M Kambale, S Jadhav. Applications of artificial intelligence in anesthesia: A systematic review. Saudi J Anaesth 2024.

- C Cestonaro, A Delicati, B Marcante, L Caenazzo, P Tozzo. Defining medical liability when artificial intelligence is applied on diagnostic algorithms: a systematic review. Front Med (Lausanne) 2023.

- M Khosravi, Z Zare, SM Mojtabaeian, R Izadi. Artificial intelligence and decision-making in healthcare: A thematic analysis of a systematic review of reviews. Health Serv Res Manag Epidemiol 2024.

- M Singhal, L Gupta, K Hirani. A comprehensive analysis and review of artificial intelligence in anaesthesia. Cureus 2023.

- DA Hashimoto, E Witkowski, L Gao, O Meireles, G Rosman. Artificial intelligence in anesthesiology: Current techniques, clinical applications, and limitations. Anesthesiology 2020.

- PS Varsha. How can we manage biases in artificial intelligence systems - A systematic literature review. Int J Inf Manag Data Insights 2023.

- DD Miller, EW Brown. Artificial Intelligence in Medical Practice: The Question to the Answer? Am J Med 2018.

- EG Bignami, M Russo, V Bellini, P Berchialla, G Cammarota, M Cascella. Artificial intelligence and telemedicine in the field of anaesthesiology, intensive care and pain medicine: A European survey. Eur J Anaesthesiol Intensive Care 2023.

- S Taruc. AI in healthcare: Data privacy and ethics con. Lexalytics 2021.

- A Kiseleva, D Kotzinos, PD Hert. Transparency of AI in healthcare as a multilayered system of accountabilities: Between legal requirements and technical limitations. Front Artif Intell 2022.

- E Stamboliev, T Christiaens. How empty is trustworthy AI? A discourse analysis of the ethics guidelines of trustworthy AI. Crit Policy Stud 2024.

- European Parliament. Report with recommendations to the Commission on a framework of ethical aspects of artificial intelligence, robotics and related technologies (2020/2012(INL)). 2020.